Alison Dennis is a London-based partner with law firm Taylor Wessing, where she co-heads the international life sciences team specializing in regulatory and transactional representation. She works with pharmaceutical and medical device manufacturers to ensure product offerings meet regulatory requirements, and negotiates regularly with regulators.

If you're like me, when you fall ill, you will often resort to at least one of two options: consulting a doctor or checking your symptoms online. The former can be expensive or time-consuming, the latter inaccurate and unreliable. What if there were a middle road, where you could report your symptoms to an AI chatbot that could give you a diagnosis? Would you trust its answer? If so, would you trust it more or less than you would trust a doctor? These tools already exist and are rapidly developing in sophistication, know-how and accuracy.

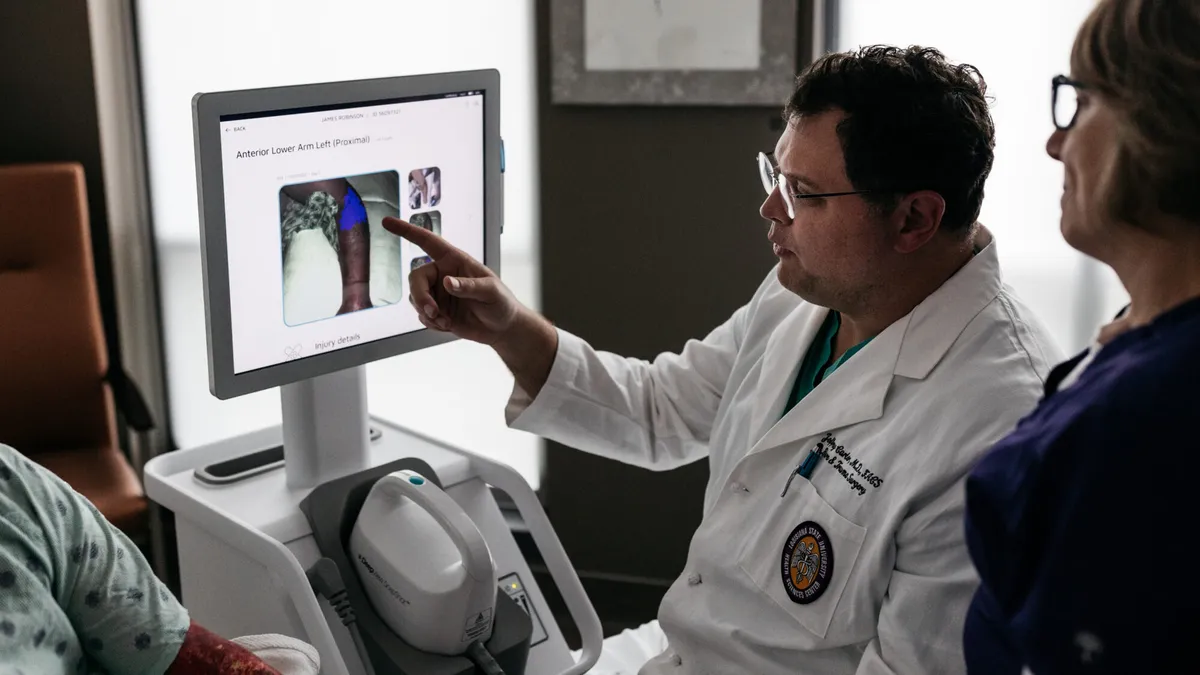

This is just one example of the many ways in which AI as a medical device will become ingrained in our lives. One of the key non-technical barriers to adoption of AI medical devices is user trust. As the adage goes, trust is hard to earn but easy to lose. Most people trust their doctor, while knowing that healthcare professionals are human and so will inevitably make mistakes. Ironically, it is this humanity that generates trust: These are individuals you can look in the eye and build a relationship with.

Not so with AI (or not yet, in any case). Beyond its programming, AI will display no empathy, morality or concern for outcomes. The consequence is that although AI as a medical device has the potential to be far more accurate than a human physician, the public will be less forgiving of any errors that do occur.

Laying the basis for safety and trust

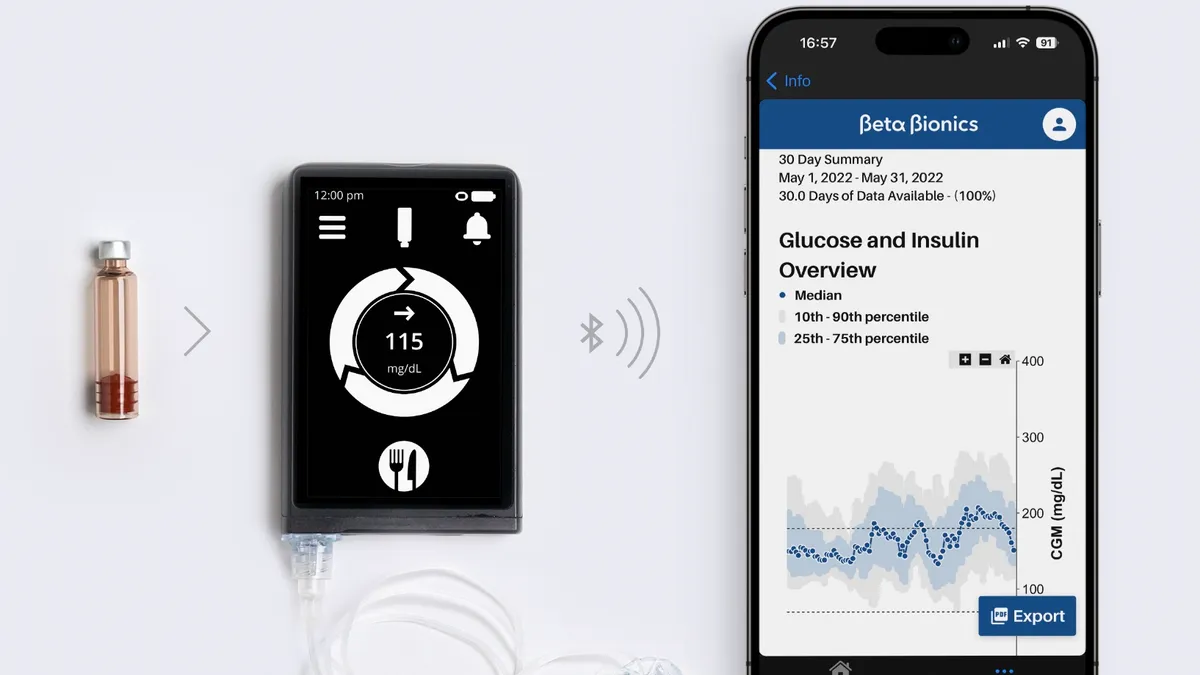

The foundations for ensuring a high degree of safety, and with it a trusting public, will have to be laid by regulators. Regulation will have to span and classify the spectrum of medical devices, from basic AI-enabled smart wearables with a clinical purpose – such as checking for an irregular heartbeat – to autonomous surgical robots. The regulation should set clear thresholds that impose increasingly rigorous standards in proportion to a device's risks.

The healthcare industry will need to educate consumers and physicians on the benefits of AI devices and how to use them optimally to obtain the claimed benefits.

Manufacturers will need to educate through explanatory notes, FAQs and video demonstrations to give sufficient assurances that their products are acceptably safe, and function as intended. They should also take responsibility for their products, offering customers clear routes to recourse in case of issues. For most, trust will require proof, and seeing is believing.

Separately, manufacturers will need to demonstrate to regulators the safety, effectiveness and quality of the device before it can be placed on the market. When providing their technical files to the regulator, manufacturers will likely need to build in a technical means of explaining how the device works and how it has been validated and verified, while also recording any changes the AI makes to the operation of the device.

Will patients and users trust technology once they have received support to understand it? Returning to our diagnostic chatbot example, patients might feel reassured and more comfortable using a device if it includes an explanation of its sources, the degree to which a patient’s symptoms match the diagnosis, and the percentage likelihood that an alternative diagnosis may be correct.
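To make this concrete, here is a minimal sketch of how such an explanation might be assembled. It is an illustration only, not any real product's method: the `Candidate` fields, the `explain` function, the condition labels and the idea of normalising raw scores into percentages are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One possible diagnosis (all fields are hypothetical)."""
    condition: str      # placeholder label, not a real diagnosis
    score: float        # assumed non-negative raw score from the model
    matched: int        # reported symptoms consistent with the condition
    reported: int       # total symptoms the patient reported
    sources: list[str]  # references the device would cite


def explain(candidates: list[Candidate]) -> str:
    """Build a plain-language summary: top diagnosis, symptom match,
    sources, and the percentage likelihood of each alternative."""
    total = sum(c.score for c in candidates)
    if total <= 0:
        raise ValueError("at least one candidate needs a positive score")

    # Rank by score; the first entry is the leading diagnosis.
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    top, alternatives = ranked[0], ranked[1:]

    lines = [
        f"Most likely: {top.condition} ({top.score / total:.0%} likelihood)",
        f"Symptom match: {top.matched} of {top.reported} reported symptoms",
        "Sources: " + "; ".join(top.sources),
    ]
    for alt in alternatives:
        lines.append(
            f"Alternative: {alt.condition} ({alt.score / total:.0%} likelihood)"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(explain([
        Candidate("Condition A", 6.0, 4, 5, ["Hypothetical guideline 1"]),
        Candidate("Condition B", 3.0, 3, 5, ["Hypothetical guideline 2"]),
        Candidate("Condition C", 1.0, 1, 5, ["Hypothetical guideline 3"]),
    ]))
```

The point of the sketch is that the answer carries its own context: the patient sees not just a label but how well their symptoms fit it and how much room remains for other explanations.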

Don’t be a Magic 8 Ball

To build patient trust, the device might also explain, for example, how the AI was trained on real-world data, whether the data was reviewed by physicians, and which healthcare authorities have approved the device's use. A 'black box AI' chatbot that produces a response without explaining its operations may resemble the digital equivalent of a Magic 8 Ball.

Manufacturers may wish to go beyond the mandatory requirements and seek additional certification of compliance with international standards or self-certify compliance with good practice principles, such as the ten guiding principles in the Good Machine Learning Practice, agreed between the U.S. Food and Drug Administration, Health Canada and the U.K.'s Medicines and Healthcare products Regulatory Agency.

One of the commonly cited issues with AI in healthcare is the risk of inbuilt bias, which can be caused when non-representative data sets are used to train AI systems. Any evidence of bias will quickly erode hard-won trust.

For example, AI medical devices trained to diagnose disease on data that does not reflect the ethnic or other diversity of the population could be less effective for unrepresented or under-represented segments of that population. In response, regulators will require proof that manufacturers have appropriately identified, measured, managed and mitigated risks arising from any bias.
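One simple way to picture what "measuring" bias could involve is to compare how often a device correctly detects disease in each population group. The sketch below is an assumption-laden illustration, not a regulatory test: the record format, the function names and the choice of sensitivity (the share of true cases detected) as the metric are all hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the share of true disease cases the device detected, per group.

    `records` is an iterable of (group, has_disease, predicted_disease)
    tuples; this format is assumed for the example.
    """
    detected = defaultdict(int)
    positives = defaultdict(int)
    for group, has_disease, predicted_disease in records:
        if has_disease:
            positives[group] += 1
            if predicted_disease:
                detected[group] += 1
    # Only groups with at least one true case appear in the result.
    return {group: detected[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Difference between the best- and worst-served groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Entirely made-up validation records for two hypothetical groups.
    records = [
        ("group_a", True, True), ("group_a", True, True),
        ("group_a", True, True), ("group_a", True, False),
        ("group_b", True, True), ("group_b", True, False),
        ("group_a", False, False), ("group_b", False, True),
    ]
    rates = sensitivity_by_group(records)
    print(rates, largest_gap(rates))
```

A large gap between groups would be a signal to investigate the training data, although what counts as an acceptable gap is a question for regulators and clinicians rather than for the code.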

Supplemental information, such as adherence to stringent cybersecurity standards, maintenance of medical confidentiality and compliance with data processing principles, will reassure patients and users of the manufacturer's commitment to regulatory compliance and high standards, which may further engender trust in the products.

For regulators and manufacturers alike, encouraging trust among healthcare professionals should be an essential target, given their roles both as end users and as intermediaries with patients. Healthcare professionals will not risk their patients' health, their reputation and possibly their career on untrustworthy technology, and so need to be confident that they can safely use and recommend AI medical devices. Gaining the trust of the healthcare community will be a critical stepping stone to achieving a trusting patient population.

As with all new technologies, it is likely that the younger, more 'plugged-in' generation will be the early adopters, leading a change in attitudes toward the acceptability of AI as a medical device. Ultimately, whether an individual decides to trust AI as a medical device, and the extent of that trust, will be decided by personal preference and access to alternatives.

It's clear that AI has the technological potential for huge advances, but its success within healthcare will be determined by the ability of the regulatory environment to meet the challenges of a constantly self-developing product, in order to ensure that public trust advances at the same speed.