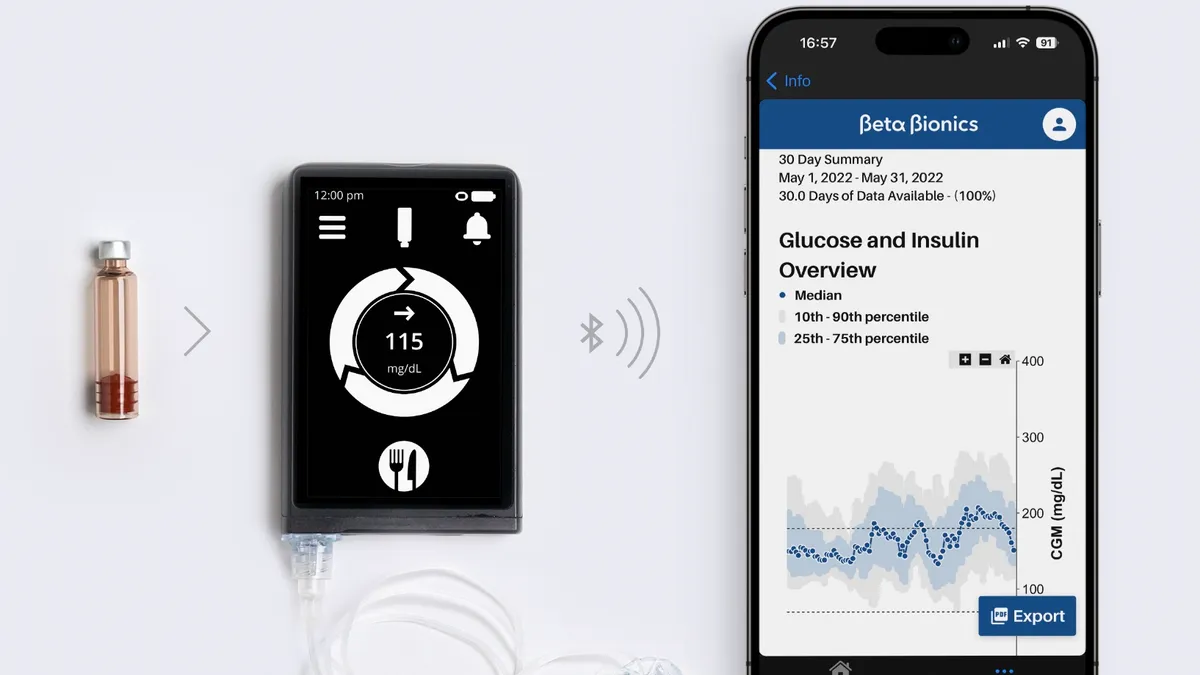

The Food and Drug Administration is grappling with a surge in the number of medical devices that contain artificial intelligence or machine learning features. The agency had authorized 882 AI/ML-enabled devices as of March, and many other devices include AI features that don’t require regulatory review.

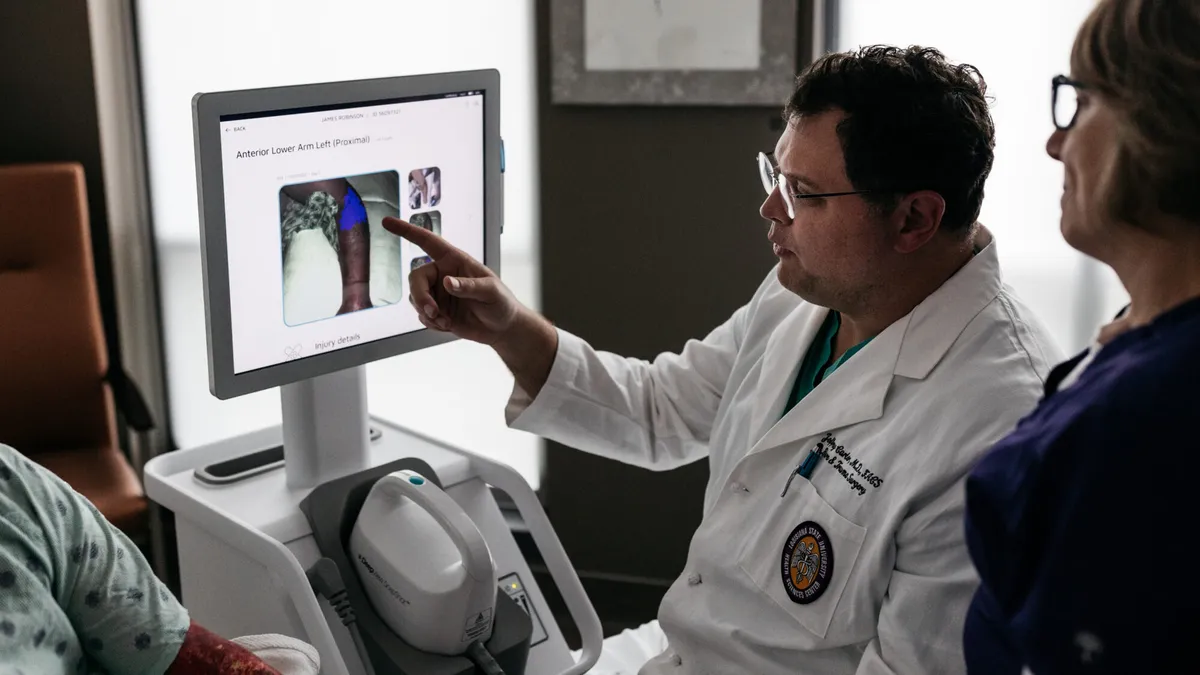

Currently, AI is most often used in medical devices in the radiology field, although the technology is also used in pathology, appointment scheduling and clinical support tools that pull in a variety of metrics.

The influx of AI in medical devices has raised questions from lawmakers and patient safety groups. Medical device industry group AdvaMed responded to a congressional request for information on AI in healthcare in May. AdvaMed said the FDA’s authority is “flexible and robust enough” for AI/ML in medical devices.

On the other hand, patient safety nonprofit ECRI listed insufficient governance of AI in medical technologies among its top health technology hazards for 2024. CEO Marcus Schabacker said AI has the potential to do good, but the technology also can do harm if it provides inaccurate results or magnifies existing inequalities.

MedTech Dive spoke with Schabacker about what developers, regulators and hospital administrators can do to ensure devices that use AI are safe and effective.

This interview has been edited for length and clarity.

MEDTECH DIVE: In late 2022, the FDA finalized guidance on clinical decision support tools, including which ones should be regulated as medical devices. What are your thoughts on that?

MARCUS SCHABACKER: We think the FDA is taking a much too laissez-faire approach here. They should be much more stringent, particularly with decision support tools, and have clear regulations.

If they don’t want to regulate it upfront, they need to put postmarket surveillance programs in place at a minimum. But we believe they should be way more forceful in the regulatory pathway and get more evidence from companies that their tools are truly supportive rather than influencing decisions more heavily.

The FDA’s always in a tough spot. On the one hand, they want to make sure that the population stays safe. That's their mission. That’s our mission too. On the other hand, they want to make sure appropriate innovation can happen and don’t want to be seen as a blocker.

What prompted ECRI to list insufficient governance of AI in medical technologies as a top concern for 2024?

We’re concerned about three areas. One is development. Typically, it’s not medical people who develop the actual tool. It’s IT staff or software developers, who might or might not understand the work environment in which the tool will be used and who rely on limited input from medical or clinical people. Therefore, the tool might or might not be appropriate once it’s put into actual clinical use.

Two, AI is a self-learning tool. It will be trained on data from a subset of people. If that subset is skewed or biased, then what the algorithm spits out will be skewed or biased.

We have made great strides, for example, when we design clinical trials to make sure that there’s a representative population in the testing of innovations from a gender, race and age perspective.

While this is still not a slam dunk, at least we are aware of it now and taking care of it in clinical trials. I don’t think that is happening to the same extent in AI development. That concerns us greatly, and we’ve already seen that AI can be misused to exclude or disadvantage certain populations. For example, there was a report about a scheduling tool that favored people the AI expected to be able to pay over people who weren’t able to pay. Those biases can get aggravated and accelerated if a tool is not carefully developed.

The last area of concern is how AI gets implemented. Is the user aware of it? Is the clinician aware of it? Does the hospital have good processes and procedures in place?

How are these AI/ML systems regulated as medical devices?

Some developers circumvent regulation by saying theirs is a decision support tool, which doesn’t require regulatory oversight. Tools that could reduce dozens of inputs or variables to a manageable number could potentially be helpful. But our fear is that such a tool quickly becomes a decision-making tool, where particularly less experienced clinicians would take what the tool suggests as a decision and act upon it.

We’ve seen that some of that can be problematic. One of the sepsis tools wasn’t very good at predicting sepsis.

We think that all regulatory agencies, but particularly those in the U.S., are way behind the 8-ball. The U.K.’s Medicines and Healthcare products Regulatory Agency has a better approach. It has created a virtual sandbox for those AIs, and the tools need to demonstrate that they’re safe and effective in that virtual sandbox.

The other point is, how do you then do postmarket surveillance on the safety and effectiveness of the use of AI? It’s often going to be difficult to find out whether the AI contributed to an adverse event, or whether it was the human or the actual device. Often it’s not clear that AI is included in the device, so someone would not necessarily think about it. We’re thinking about whether we can do something like a nutrition label for AI. If AI is somewhere in there: What is the AI? How was it developed? What’s the testing pool? That way, users are more aware of the AI.

What is working about how the U.K. is approaching AI regulations?

Our understanding is that before the AI is actually allowed to be used in a clinical setting, there’s a virtual simulation of the application. It needs to demonstrate that it’s effective and safe to use. That’s a prerequisite for clinical approval.

Currently, the majority of AI tools in the U.S., if they go through registration at all, go through the 510(k) route. And the 510(k) route, as you know, is a very abbreviated process where you essentially just need to demonstrate that your device is equivalent to one that already exists. That has not proven to be a very effective safety tool.

How are hospitals approaching AI tools?

We know of one large system that seems to have a relatively robust approach to this, and we applaud that. But the majority of what we see out there is hard for an administrator to grasp. Often these companies make great promises in terms of cost savings, revenue acceleration or patient safety. To me as an administrator, that sounds great. Sure, why wouldn’t I do that? What administrators don’t necessarily understand is that a lot of these tools do not undergo any rigorous evaluation process upfront.

There needs to be a way more thoughtful approach from administrators in saying, “AI is here. AI will stay. AI can be helpful. What do we need to do to make it safe?”

In light of the lack of regulatory oversight, the enormous commercial interest and the increasing capabilities of these tools, it behooves administrators to take a more risk-averse approach.