More than 40% of the artificial intelligence tools cleared or approved by the Food and Drug Administration lack clinical validation data, according to a study recently published in Nature Medicine.

Researchers at the University of North Carolina School of Medicine found that 226 AI-enabled medical devices authorized by the FDA between 1995 and 2022 were missing clinical validation data. The figure represents about 43% of all 521 devices the agency authorized during this period.

“The FDA and medical AI developers should publish more clinical validation data and prioritize prospective studies for device validation,” the study authors wrote in the Aug. 26 article.

Most AI medical devices have been cleared through the FDA’s 510(k) process, a less rigorous regulatory pathway in which manufacturers must demonstrate that their products are as safe and effective as, or substantially equivalent to, a legally marketed predicate device.

“A lot of those technologies legally did not need [validation data], but the argument we made in the paper is it could potentially slow adoption if we’re not seeing evidence of clinical validation, even if the device is substantially equivalent to a predicate,” Sammy Chouffani El Fassi, the study’s first author, said in an interview.

Additionally, small changes to an algorithm “can really change the effect” of a device after it is implemented, meaning the best way to know how a device will work is to test it in a clinical setting, said Chouffani El Fassi, an M.D. candidate at UNC.

The researchers categorized AI medical devices based on whether they had any clinical validation, meaning they were tested on real patient data for safety and effectiveness. In cases where validation data was available, they also tracked what type of study was done.

Of the 292 devices with validation data, 144 were studied retrospectively, which the researchers defined as testing done before the devices were implemented in patient care or testing that used data from before the start of a study. The other 148 were validated prospectively, meaning they were tested in patient care or with data collected after the start of a trial.

Of the devices tested prospectively, just 22 underwent randomized controlled trials.
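
To put those counts in proportion, the shares can be worked out directly. The short Python sketch below uses only the figures reported above; the variable and function names are illustrative and do not come from the study.

```python
# Counts as reported in this article (the names are illustrative, not from the study).
TOTAL_AUTHORIZED = 521   # AI-enabled devices authorized between 1995 and 2022
NO_VALIDATION = 226      # devices missing clinical validation data
RETROSPECTIVE = 144      # devices validated retrospectively
PROSPECTIVE = 148        # devices validated prospectively
RCT = 22                 # prospectively tested devices that underwent randomized controlled trials


def share(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Devices with any clinical validation data, as the sum of the two study types.
with_validation = RETROSPECTIVE + PROSPECTIVE

breakdown = {
    "no validation, share of all authorized": share(NO_VALIDATION, TOTAL_AUTHORIZED),
    "retrospective, share of validated": share(RETROSPECTIVE, with_validation),
    "prospective, share of validated": share(PROSPECTIVE, with_validation),
    "RCT, share of prospective": share(RCT, PROSPECTIVE),
    "RCT, share of all authorized": share(RCT, TOTAL_AUTHORIZED),
}

for label, pct in breakdown.items():
    print(f"{label}: {pct}%")
```

By this arithmetic, roughly 15% of the prospectively tested devices, or about 4% of all authorized devices, went through a randomized controlled trial. The reported categories (226 plus 292) add up to 518 rather than 521; the article does not say how the remaining devices were classified.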

Value of prospective studies

Chouffani El Fassi said prospective studies can provide a better understanding of how a device works in real life. The researcher was part of a team at Duke University validating an algorithm that detects cardiac decompensation using electronic health record data. The team, which included the Duke Heart Center and Duke Institute for Health Innovation, validated the algorithm with a retrospective study. They also ran a prospective study where cardiologists used the algorithm and noted whether they agreed with its diagnosis of cardiac decompensation in patients.

“Prospective validation was particularly helpful because we could see what needed to be improved in the device,” Chouffani El Fassi said, adding that the group learned the user interface needed to be improved so the device was easier to use and more efficient.

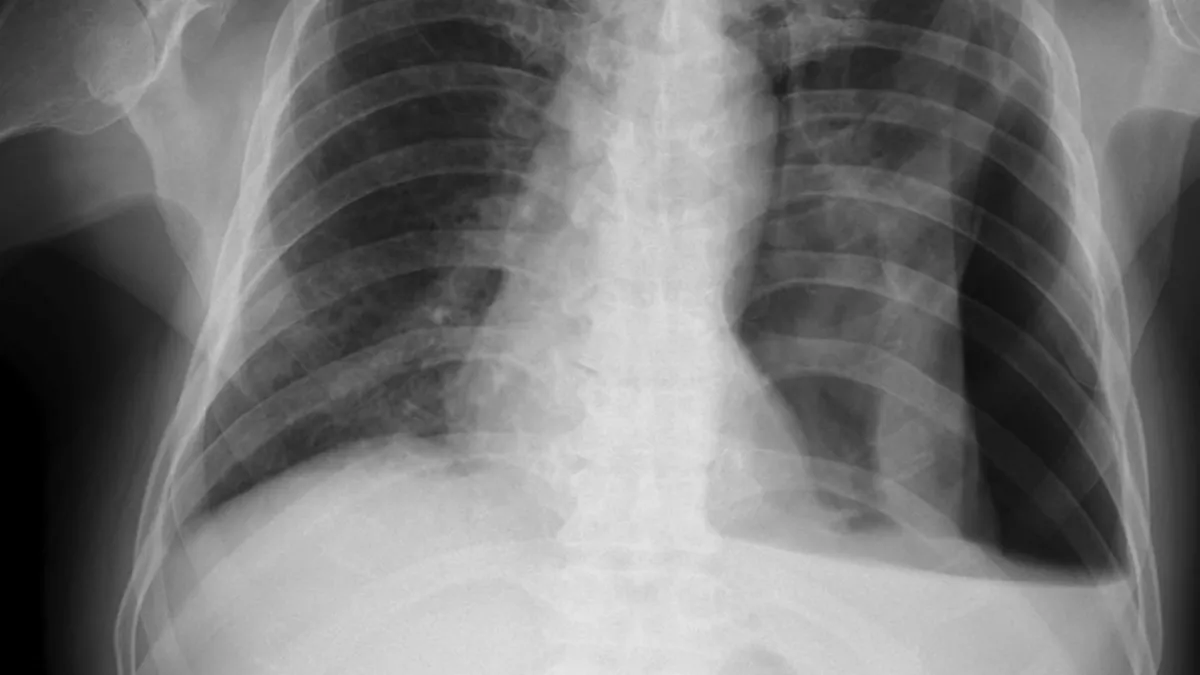

Testing an algorithm prospectively can also reveal potential confounding variables. Chouffani El Fassi provided the hypothetical example of a device that reads chest X-rays: After the start of the COVID-19 pandemic, some people’s chest X-rays would start to appear different. Testing the device only on pre-pandemic data wouldn’t reveal how it handles those changes.

Prospective studies don’t have to be complicated or burdensome, Chouffani El Fassi added. For example, a study could be as simple as a physician using an ultrasound probe optimized with AI technology on a patient and rating, on a scale of 1 to 5, how useful the tool was in clinical practice.

“It’s really basic, but we would consider that a prospective study because the confounding variables are there. Let’s say a doctor has a hard time using it because it has a bad user interface; he or she is going to give it a 1 out of 5,” Chouffani El Fassi said. “You learned something new; you saw how that device actually works in real life. We see that as the most valuable kind of data.”