After years of complaints that cybersecurity wasn’t being taken seriously by device makers, hospitals and regulators, Congress has now given the Food and Drug Administration clear oversight over the cybersecurity of medical devices.

Amid heightened fears of both hacks and regulation, medical device companies are taking security into consideration earlier in the process, says Naomi Schwartz, who joined San Diego, Calif.-based medtech security firm MedCrypt as senior director of cybersecurity quality and safety last summer.

Before joining the company, Schwartz worked as a premarket reviewer and consumer safety officer for the FDA’s Office of In Vitro Diagnostics and Radiological Health, where she approved the software and cybersecurity for the first automated insulin dosing system.

Schwartz spoke with MedTech Dive’s Elise Reuter about the FDA’s approach to cybersecurity and how to manage the tension between patients wanting access to their data and device companies looking to minimize liability.

This interview has been edited for length and clarity.

MEDTECH DIVE: How did you get involved with cybersecurity for diabetes devices?

SCHWARTZ: After 14 years of defense contracting, where I built radars and electronic warfare systems to challenge some radars, [I spent] 6 1/2 years at FDA reviewing diabetes devices. I got heavily involved in software interoperability, cybersecurity, wireless coexistence with [electromagnetic compatibility] for the devices, because those just happened to all fit within my skill set, and FDA doesn't have a lot of people who do those things.

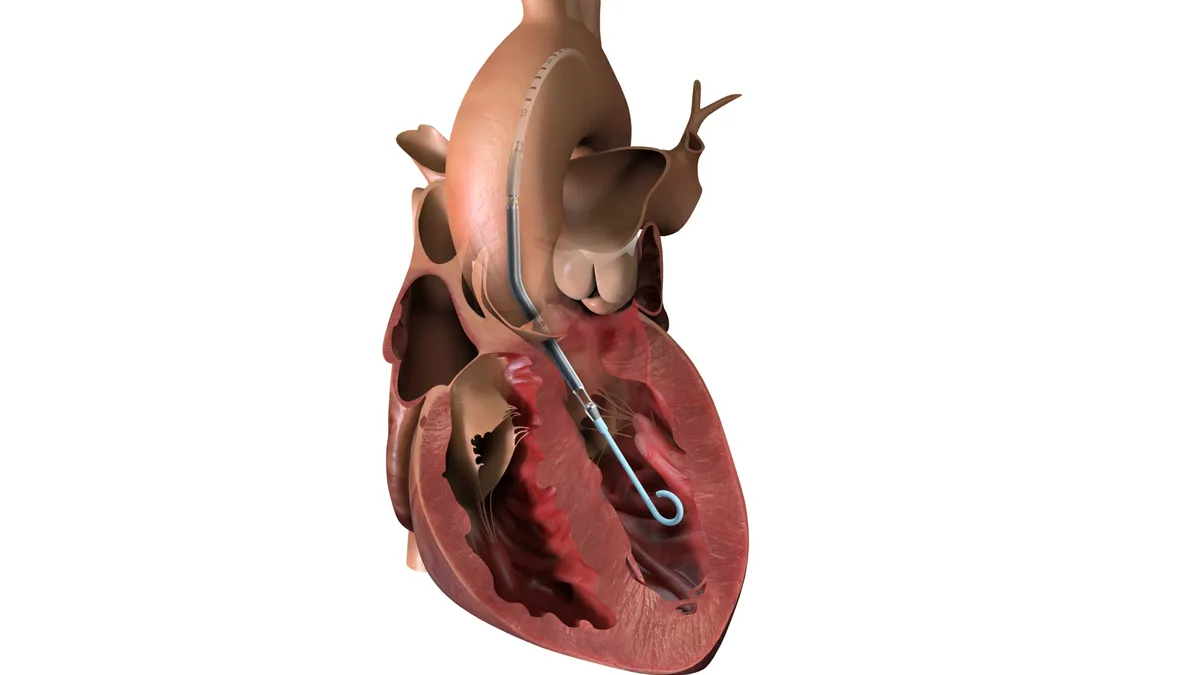

It was the perfect timing because FDA was just starting to review the first automated insulin dosing system — the Medtronic 670G — and it's a super high priority for the center to get devices like that onto the market in the U.S. There has been a long collaboration between the center and various manufacturers and they really wanted to see a change for the patient community.

It all came together within that 6 1/2-year period where we had the ability to reclassify some CGMs to create a Class II [medium risk] pathway, and then a Class II for pumps that connect to them, and a Class II pathway for algorithms that can run on the pumps or on a separate controller, that could then control these pumps to dose based on the information from these CGMs. So this huge interoperability thing all happened at once, and I landed right in the middle of it.

What was it like working on the first automated insulin dosing system? What considerations went into that?

Getting the first one approved, that was a [premarket approval], and all of the components of that are still in Class III [the highest risk], for a variety of reasons. Getting through that first review was very rapid fire because the Center had such a high priority on reviewing it; they wanted it reviewed in a short timeline, fully interactively. That was a pretty wild ride.

The clinical consultants, the medical officer who reviewed it, sat just a few doors down from me. We spent so much time in each other's offices just sitting there and walking through stuff. I was learning all about diabetes devices for the first time, and I was having lots of questions: do these safety guardrails in the software design make sense, do these software requirements make sense?

We had long conversations about a list of unresolved software anomalies, some of which proved unacceptable and had to be resolved before they could go to market. So that was very intense.

Then, we had some time to think about what we would do as we got to know that device better and as we saw it in the post market.

At that point, there were also standalone CGMs from Dexcom and Abbott, in addition to the Medtronic CGMs that were used in their AID [automated insulin delivery] system, and each of those was its own little Class III device. Some of them connected to other things like insulin pumps, which would shut off dosing if they saw a low CGM reading and things like that. What do we know about the way these perform and where they don't perform as well? And how would we write special controls that require a certain threshold for performance for these things to be standalone devices, and Class II with special controls that would make it easier for these things to communicate with other devices, with less bureaucracy and paperwork and headache for manufacturers and for FDA?

Every time there's a change in a Class III device, every single time, it has to be reviewed. For a Class II device that's not true; there are different thresholds. FDA was excited at the thought that we could review this faster, turn around faster, it would be cheaper, and more manufacturers might get into the space that way.

From your perspective, what are the most important security considerations for these devices?

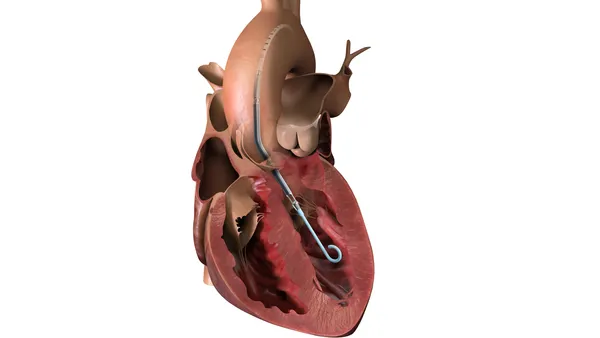

So the first security concern that came to light was that people are hacking these devices right now. How is that possible? It didn't take much investigating to learn some of the older insulin pumps on the market were transmitting their data in plain text to the other devices they communicated with. That means that I, as an electronic warfare person, could have pulled out some of my lab equipment, just sat there with an antenna and listened to every command and every data packet being transferred between these two components, and been able to demodulate them and figure out exactly what was being sent by whom, and at what time. I could then inject my own commands in the middle, and there was no way to know they were coming from me and not from the legitimate device. That's super, super not secure and not safe.
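Editor's illustration: the injection problem Schwartz describes comes from commands carrying no proof of who sent them. The sketch below is a minimal, hypothetical example of one common mitigation, attaching an HMAC tag and a message counter to each command so a receiver can reject forged or replayed packets. It does not represent any real pump or CGM protocol; the function names `seal` and `open_sealed`, the packet layout, and the sample commands are all assumptions made for illustration. Note that authentication alone does not hide the data from an eavesdropper; that would also require encryption.

```python
import hashlib
import hmac
import struct

TAG_LEN = 32  # HMAC-SHA256 produces a 32-byte tag
HEADER_LEN = 8  # 64-bit big-endian message counter


def seal(key: bytes, counter: int, command: bytes) -> bytes:
    """Build a packet: counter || command || HMAC(key, counter || command).

    Hypothetical layout for illustration only.
    """
    header = struct.pack(">Q", counter)
    tag = hmac.new(key, header + command, hashlib.sha256).digest()
    return header + command + tag


def open_sealed(key: bytes, last_counter: int, packet: bytes):
    """Return (counter, command) if the packet is authentic and fresh, else None."""
    if len(packet) < HEADER_LEN + TAG_LEN:
        return None  # too short to contain a counter and a tag
    body, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, expected):
        return None  # forged or corrupted: sender does not hold the shared key
    (counter,) = struct.unpack(">Q", body[:HEADER_LEN])
    if counter <= last_counter:
        return None  # replay of a previously seen packet
    return counter, body[HEADER_LEN:]


if __name__ == "__main__":
    shared_key = bytes(range(32))  # placeholder; a real key would be provisioned securely
    packet = seal(shared_key, 1, b"BOLUS 1.5")

    print(open_sealed(shared_key, 0, packet))  # accepted: authentic and fresh
    print(open_sealed(shared_key, 1, packet))  # rejected: counter already seen

    attacker_packet = seal(b"x" * 32, 2, b"BOLUS 25")
    print(open_sealed(shared_key, 1, attacker_packet))  # rejected: wrong key
```

With a plaintext, unauthenticated link, all three packets in the usage example would look equally valid to the receiver. With the tag and counter, only the first is accepted.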

We knew that people were hacking their own insulin pumps. And it works for these people and it's probably been saving lives. But it'd be really preferable if there were a safer, more secure mechanism for them to do all of that without hacking it themselves.

So FDA is exercising enforcement discretion. They don't want to take these tools away from people, but they really would prefer to see people using cleared or approved devices. And so there's this fine line FDA is walking.

Medtronic had a recall of those older insulin pumps [that people are using], but there was no requirement that every patient who has one has to send it back. There's no way to enforce that.

I’ve seen several comments from people who are concerned about losing access to these devices. Is there a solution?

A lot of those patients have asked FDA repeatedly [to] tell these companies that they have to free our data for us to use. FDA can't do that. That's not their authority to say, “Dexcom, you must make the data accessible in real time.” There might be reasons why, say Dexcom, doesn't want to do that. There may be liability issues with liberating this data for individual users where the individual users may use that data in a way that isn't in the indications for use.

These companies don't want to turn off patients who rely on their devices. They also don't want to be liable for people doing stuff that is not validated. It's a tough line to tread.

At the end of the year, Congress gave the FDA power to establish cybersecurity standards for medical devices. What’s the impact here?

The appropriations bill, which passed in December, includes Section 3305, which really gets to the heart of legal authority to ensure cybersecurity in medical devices. It’s an amendment to the Food, Drug and Cosmetic Act, which is a very different thing than: “Here's something I recommend strongly and if you don't follow it, we're going to hit you with a quality system deficiency in your premarket submission.”

The [FDA’s cybersecurity] guidance will be updated in a little less than 180 days now, and should clarify what exactly they mean by cyber device. For the legislation in particular, they're going to have to clarify what it means to have the ability to connect to the internet.

There are some questions in there. But what it means is that FDA can now point to a change in the act that says, we will ask you about this and you must tell us what you've done. And if we don't think it's good enough, we can find something “not substantially equivalent” [to a legally marketed predicate device], or not approvable [for a premarket approval]. That's a big deal.

You said you’re currently working with six different diabetes companies on cybersecurity. Can you name them?

We don't want to confirm which specific companies we're working with. What I can say: Some of these are well-established companies and some of them are not. Some of them are innovators coming into the space. And it's super important for them to be taking the approach they are. To come find a specialist who can help them right-size security.

By right-size, I don't mean, what's the bare minimum to get past FDA. It's what's the right kind of security given the way your device is designed. Because not all CGMs do the same thing. Some of them may have more risks than others, some may be intended for people with Type 2 diabetes who have less variability. There you have a little bit less concern about the real-time nature of the data. There are all sorts of little catches in there. And when they come to MedCrypt, they're saying we want to be proactive about this, we want to build it in. We don't want to get caught at the end of our design cycle trying to submit it to FDA and get asked tough questions that require a redesign that takes a long time to fix.

It's super exciting to see the way that manufacturers over the last year and a half have changed their perception from, “Oh, we gotta go do that,” to “hey let's get this stuff dealt with early, so that we can have confidence.”