Dive Brief:

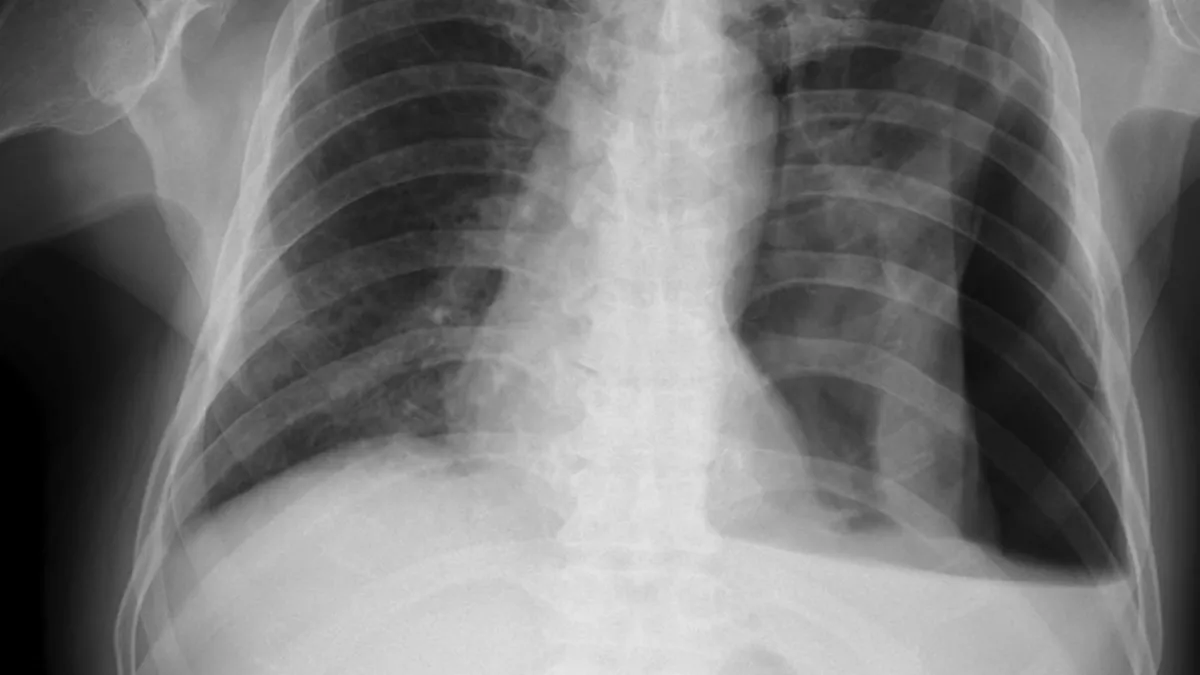

- Chest radiograph reports created by a generative artificial intelligence model matched the quality and accuracy of reports by in-house radiologists in a retrospective study.

- The study, published in JAMA Network Open, applied the AI to 500 chest radiographs taken in an emergency department. The clinical quality and textual accuracy of the AI reports were similar to those of reports by in-house radiologists and better than those of reports from a teleradiology service.

- Based on the findings, the Northwestern University researchers who wrote the paper concluded the model could “enable timely alerts to life-threatening pathology while aiding imaging interpretation and documentation.”

Dive Insight:

Physicians in emergency departments sometimes need to interpret diagnostic images themselves rather than wait for a radiologist, notably when patients arrive overnight and need treatment quickly but no specialist is available until morning. A previous study found that, while physicians typically interpret images accurately, “rare though significant discrepancies” happen.

Teleradiology services can cover gaps in the availability of in-house radiologists, but the emergence of generative AI models capable of interpreting images and writing reports based on them suggests there may be a technological solution.

To evaluate that idea, the researchers developed an AI model and tested it on 500 chest radiographs taken at the Northwestern emergency department. Both teleradiology and final radiologist reports were available for all the images. No images were from patients younger than 18 years or older than 89 years.

Six practicing board-certified emergency medicine physicians viewed the images and rated the reports for quality and clinical accuracy. There was no significant difference between the ratings of the AI and in-house radiologists, which scored 3.22 and 3.34, respectively, on a five-point scale. Most of the reports scored five, meaning no changes were needed.

“As AI reports can be generated within seconds of radiograph acquisition, real-time review could notify physicians of potential abnormalities, aiding in triage and flagging critical findings requiring early intervention. The results of the current study suggest that the AI model was similarly proficient in identifying these clinical abnormalities as a radiologist,” the authors wrote.

The AI produced more reports that the raters disagreed with than the in-house radiologists did, but the level of disagreement with critical findings was low. The teleradiology service delivered more reports that required minor changes in wording or style than either the AI or the in-house radiologists, which gave it a significantly lower rating. As the researchers note, a prospective study is needed to validate the AI.