Dive Brief

- Some types of risk-scoring tools would be regulated as medical devices, according to a final guidance issued by the Food and Drug Administration on Sept. 28.

- Many of these software tools have been exempted from FDA regulation under the 21st Century Cures Act, as long as the healthcare provider can independently review the basis of the recommendations and doesn’t rely on them to make a diagnostic or treatment decision.

- Some medical device companies welcomed the changes, saying they provided more clarity on the FDA’s thinking, even as they cautioned that products may take longer to come to market. Others saw the guidance as overstepping Congress’s intent.

Dive Insight

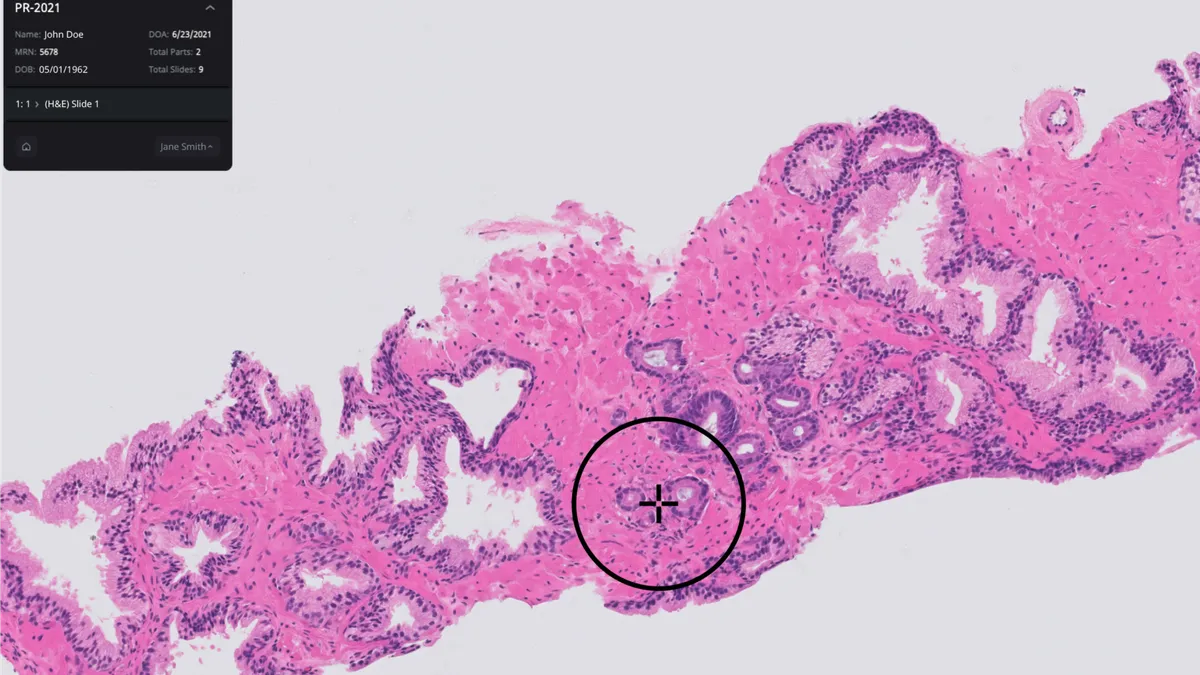

The guidance applies to clinical decision support software, a broad category of software tools that can help physicians and other healthcare providers detect illnesses and generate alerts when a patient’s condition changes. For example, software that uses electrocardiogram (ECG) data to detect arrhythmias, like that used by Apple’s and Fitbit’s smartwatches, is regulated as a medical device. The same goes for software that analyzes images to differentiate between ischemic and hemorrhagic stroke, or that is used by radiologists to “triage” patients and review potential cases of pulmonary embolism.

“The 21st Century Cures Act was very clear that anything that analyzes a medical image directly would be a medical device,” said Christina Silcox, research director for digital health at Duke-Margolis Center for Health Policy.

The biggest policy change, the FDA noted in the guidance, is that some types of predictive tools, which previously fell into a gray area, should now be regulated as medical devices. The agency gave an example of software that analyzes patient information to detect stroke or sepsis, and generates an alarm to notify a healthcare provider.

Sepsis detection

Several such risk-scoring tools are already in use: Electronic health record companies Epic Systems and Cerner have both made sepsis surveillance tools to detect the deadly condition before patients deteriorate. Neither is FDA-cleared, and a study last year by Michigan Medicine found that Epic’s tool performed worse than advertised.

“I think that there is a distinction in people's minds around prediction and diagnosis. And you saw this with sepsis, where we’re not saying they have sepsis now, we're predicting they're going to have sepsis,” Silcox said. “In some ways, I think FDA said a risk score, the prediction, it's still a device. That was a space where people weren't quite sure.”

Bradley Merrill Thompson, an attorney with Epstein Becker Green in Washington, D.C., who advises clients on FDA regulations, wrote that part of the FDA’s argument centers on automation bias, the concern that healthcare providers might rely too much on an automated suggestion.

“FDA is desperately trying to draw a distinction between software that is too self-confident in its recommendations (Dr., the diagnosis is X), versus software that is more tentative in its recommendation, (Dr., the diagnosis is probably X, but might also be something else.) But that whole line of argument is a new addition from the draft guidance,” he wrote in an email. “Apparently, FDA has a very low view of healthcare professionals if the agency thinks that mere software can ‘direct’ doctors what to do, as if the doctor then has no choice.”

These tools are often used without patients’ knowledge, and nothing in the guidance suggests that patients would have to be notified, Silcox said. But patients could still benefit from the assurance that someone has reviewed the software tools to ensure they work, she said.

“There are always trade-offs, but in my view this guidance could provide a lot of benefit to patients,” Kellie Owens, an assistant professor of medical ethics at NYU’s Grossman School of Medicine, wrote in an email. “It could help protect patients from living under the jurisdiction of clinical decision support software that doesn't work or that systematically prevents some groups from receiving the care they deserve.”

The guidance comes amid a broader discussion about how bias in medical devices can affect patient care. Multiple recent studies have pointed to evidence that pulse oximeters overestimate blood oxygen levels in patients with darker skin. Researchers at Sutter Health recently found that the inaccuracy led to hours-long delays before Black patients with COVID-19 received supplemental oxygen.

What it means for software developers

Epic Systems declined to comment on the guidance. Suchi Saria, an associate professor at Johns Hopkins and CEO of Bayesian Health, a startup with a sepsis alert platform, supported the changes.

The existing lack of clarity on how to assess the quality of software solutions makes it hard for health systems or provider groups to confidently adopt these kinds of tools, Saria said. They’ve had to turn to published and peer-reviewed research, which isn’t available for all of the sepsis alert tools on the market.

“Traditionally, when it comes to drugs and devices, organizations have relied on groups like the FDA to validate, evaluate and endorse certain products. In that sense, I think it's very exciting that there will be more clarity now through organizations like the FDA in evaluating these kinds of products,” Saria said.

She cautioned that FDA review could slow these software products’ path to market, given the many processes involved in evaluating machine learning solutions for safety and quality.

Despite these concerns, Saria expects a benefit to her company overall.

“[The] FDA coming in and wanting to play a bigger role in oversight actually only helps Bayesian's case because it allows us to accelerate that agenda,” she said. “We think there's so much noise in the market and it's hurting credible solutions from getting implemented faster.”

Mayo Clinic, which is developing a suite of ECG algorithms with spinout company Anumana, said the examples in the guidance were helpful in evaluating how the 21st Century Cures Act applies to software products.

“Overall, the FDA regulatory policy for clinical decision support software uses is much the same as its draft guidance, and is consistent with the FDA’s risk-based approach for medical device regulations, more broadly,” wrote Colin Rom, who leads science and innovation policy for Mayo Clinic’s government engagement team. “Mayo Clinic believes that these policies and an approach based on risks will enable the development and deployment of safe, effective and ethical digital health technologies for our patients.”

Enforcement

There are still plenty of details to be hammered out, including how the changes would be enforced for software tools that are now considered devices.

“I think the guidance provides helpful clarification of the agency's intentions to regulate some clinical decision support tools, but also leaves a lot of questions about what that regulation will ultimately entail,” NYU’s Owens wrote.

An FDA spokesperson wrote in an email that the agency has “discretion on when to take appropriate risk-based actions, as facilitated by the publicly-available guidance. Guidances describe the agency’s current thinking on a topic and should be viewed as recommendations, unless specific regulatory or statutory requirements are cited.”

The agency added that developers are encouraged to work with the FDA as early as possible in the process to get answers to their questions, through the Center for Devices and Radiological Health’s pre-submission program.

“I absolutely believe that we should take [the FDA] literally, and that they would apply this guidance to regulate a lot of software which is presently in the marketplace but for which the developers have not sought to comply with FDA requirements,” Thompson wrote.

Whether the agency would allow risk-scoring tools developed and used by hospitals internally wasn’t addressed in the guidance, but the FDA has a long-standing policy of allowing for the practice of medicine, Thompson added. Vendors that develop products for sale to multiple institutions would need to comply, he said.