Leveraging real-world evidence in regulatory decision-making for medical devices has been on FDA's agenda for years. It took the pandemic to speed efforts to incorporate real-world data in evaluating COVID-19 diagnostics and antibody tests.

Now, the challenge is sifting through less-than-perfect data to make those decisions, a panel of FDA and industry officials said at AdvaMed's annual conference last week.

"That's the million-dollar question that we're trying to solve," said Sandi Siami, senior vice president at the Medical Device Innovation Consortium (MDIC), who heads the efforts of the National Evaluation System for health Technology Coordinating Center, or NESTcc.

Using real-world data introduces an inherent "level of missingness" in the clinical evidence regarding the usage, benefits and risks of a medical product, according to Siami.

FDA in 2016 awarded $3 million to MDIC to establish NESTcc, which has worked with medtechs including Abbott and Johnson & Johnson to evaluate the use of real-world evidence (RWE) in the regulatory process. The center was created to integrate data from a myriad of sources to provide more comprehensive evidence of medical device safety and effectiveness.

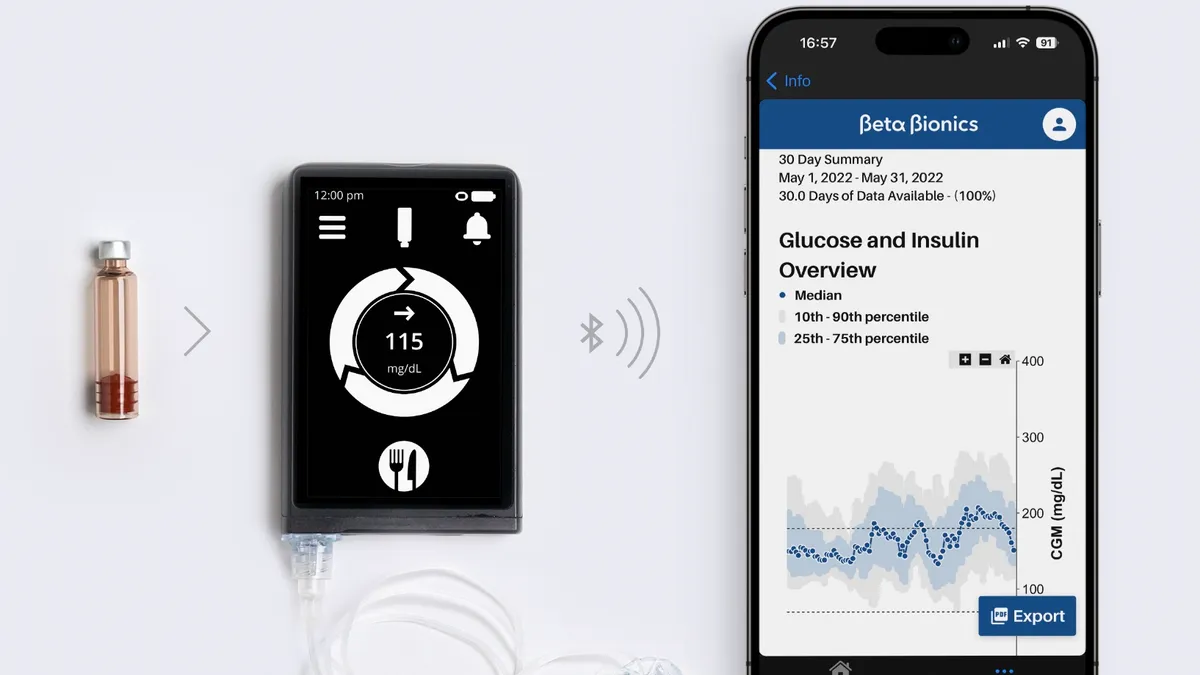

In a nutshell, RWE is the clinical evidence regarding the usage, benefits and risks of a medical product derived from the analysis of real-world data (RWD). Common sources of RWD include electronic health records (EHRs), claims data, registries, patient-generated health data, wearables and mobile apps.

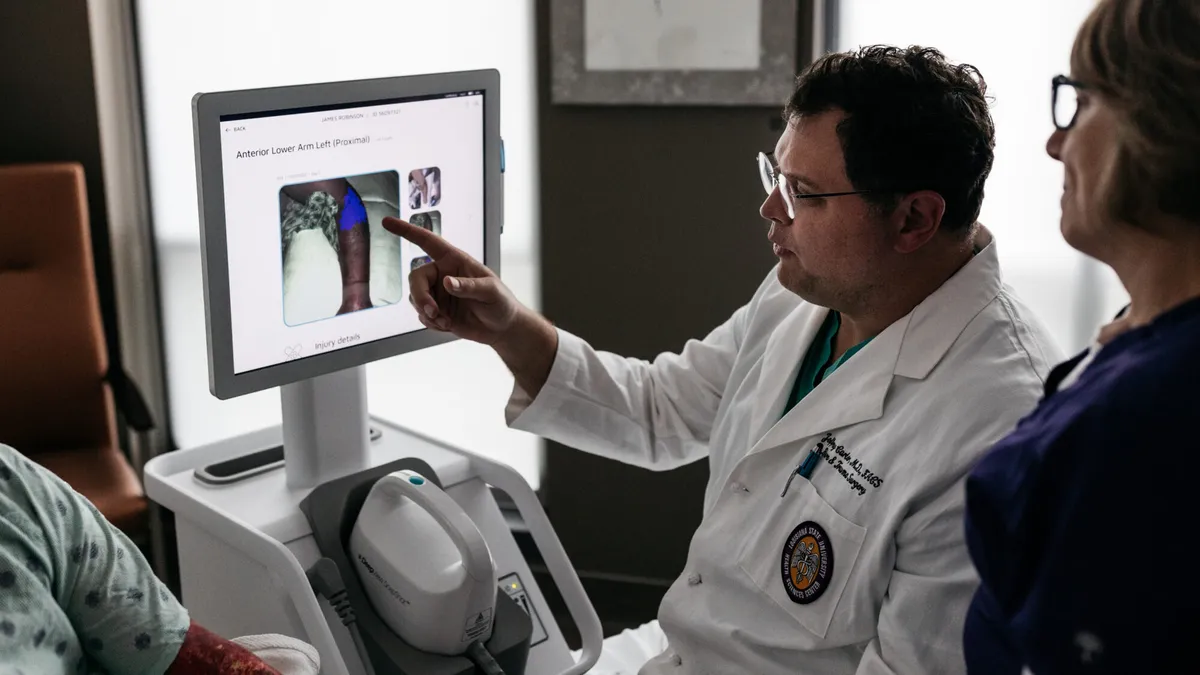

FDA recently made the case that RWE provides a "broader and richer set of safety information" to better inform its oversight of the total product lifecycle, including premarket submissions and postmarket surveillance, by offering real-life clinical performance in a way that controlled clinical trials cannot.

Still, Wendy Rubinstein, director of personalized medicine at FDA's Center for Devices and Radiological Health, told the panel during AdvaMed's annual Medtech Conference that not all data are created equal.

Although FDA says it does not endorse one type of RWD over another, Rubinstein said one difficulty in using data from wearables and health apps, for example, is that "they're not collected necessarily for the purpose of supporting RWE-related applications."

CDRH in August 2017 issued guidance to clarify how FDA evaluates RWD to determine whether they are sufficient for generating the types of RWE the agency needs to determine the safety and effectiveness of a device. If real-world data are "reliable and relevant" to the regulatory question at hand, the agency has said that RWD may be considered valid scientific evidence supporting both premarket and postmarket regulatory decisions.

But validating the data from disparate sources remains a formidable challenge.

Heather Colvin, director of evidence and outcomes policy for medical devices at Johnson & Johnson, said RWD is "not just about clinical care" as the definition is broad enough to "allow us to look at new sources of data that are being generated ... and start thinking in new and innovative ways about how we collect data and engage patients."

The growing availability of RWD sources has increased the potential to generate more robust RWE to support FDA decisions.

"As FDA continues to advocate for better access to real-world evidence, we are seeing these new data streams becoming available as well as more examples of use cases where such data is being used for a variety of decisions," said Diane Wurzburger, GE Healthcare's executive for regulatory affairs, developed markets and global strategic policy.

FDA in March published an analysis of 90 examples of RWE used to support decisions, with medical device makers such as Edwards Lifesciences, Intuitive Surgical and Medtronic cited. Of those 90 examples provided by the agency, just three involved digital health devices and only two featured patient-generated data, while most of the other examples featured registries or medical records.

Nonetheless, Siami said RWE sources can be quite broad.

"We're talking about genomics potentially too and really going beyond what we normally use or think about data," Siami said. "The analytical output becomes the clinical evidence that is then used to determine the safety, effectiveness or performance, in this case, of medical devices."

One way NESTcc is trying to address this challenge is through standardization of the data collection process for RWD that meets robust methodological standards, Siami said.

Grappling with the data

Validating real-world data remains a formidable challenge to generating the kind of robust RWE needed to support FDA decisions. Adding to the complexity, many other important stakeholders are involved.

"It's also about the data providers, the sources of data, that have to be on board. We're talking health systems, academic medical centers, payers and patients who generate the data," NESTcc's Siami said.

FDA in its 2017 guidance warned that data collected during clinical care or in the home environment may not have the same quality controls as data collected within a controlled clinical trial setting.

"Certain sources of RWD, such as some administrative and healthcare claims databases or EHRs, may not have established data quality control processes," it reads.

Danelle Miller, vice president of global regulatory policy and intelligence for Roche Diagnostics, said "it's not always clear" in an EHR "what patient got what IVD with what result — getting all of that information is a real challenge."

J&J's Colvin noted that one insight from the NESTcc test cases is that variability exists not just among the different RWD sets but also across health systems.

"While standardization would be great, we aren't able to standardize the healthcare delivery system writ large," Colvin remarked. "We need to find ways to look at how we understand the individual datasets and characterize them at their source, and then look for places where that curation process can give us some equivalence."

NESTcc's Siami concluded that the difficult challenge of data curation, the organization and integration of data collected from various sources, is a continual process with high stakes for the FDA's real-world evidence initiative.

"These are such important issues. If we don't solve this, we can't effectively use real-world data for that evidence," Siami warned.