Dive Brief:

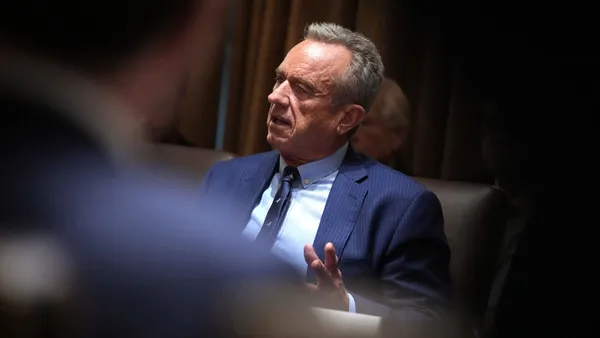

- Scott Gottlieb, the Food and Drug Administration commissioner during the first Trump administration, called for the agency to return to older policies on artificial intelligence in a JAMA article published last week.

- Gottlieb, who led the FDA from 2017 to 2019, wrote that “recent changes to policies related to the regulation of AI have added new uncertainties.” Gottlieb is now a senior fellow at conservative think tank the American Enterprise Institute, a partner at venture capital firm New Enterprise Associates and a board member of Illumina and Tempus AI.

- Specifically, Gottlieb said the FDA should revert to an older interpretation of the 21st Century Cures Act that would exempt more types of clinical decision support software from the agency’s premarket review process.

Dive Insight:

The beginning of the second Trump administration has brought questions about how the FDA will regulate AI in medical devices. A permanent FDA commissioner has not yet been appointed, and nominee Martin Makary is still awaiting a confirmation hearing. Troy Tazbaz, who led the agency’s Digital Health Center of Excellence, resigned on Jan. 31. The center set a framework for the device center’s approach to regulating AI.

In his first week, President Donald Trump signed a broad executive order calling for agencies to revoke AI policies “that act as barriers to American AI innovation.” Experts interviewed by MedTech Dive said the Trump administration could move to roll back controversial policies adopted during the Biden administration, such as the clinical decision support final guidance referenced in Gottlieb’s JAMA article.

The FDA issued the final guidance in 2022, bringing several risk-scoring tools, such as software to predict the risk of sepsis or stroke, under the agency’s oversight. Previously, these tools were exempt from premarket review, as long as clinicians could independently review the basis of the recommendations and didn’t rely on them to make diagnostic or treatment decisions.

The guidance addressed concerns about automation bias and about the use of clinical decision support software in time-sensitive situations. It also treated software that integrates data from multiple sources, such as imaging, consultations and laboratory results, as a medical device.

The FDA finalized the guidance following scrutiny of a sepsis risk-scoring tool made by electronic medical record software company Epic. A study found that the tool poorly predicted sepsis despite its widespread adoption.

Gottlieb, in the article, raised concerns that as a result of the final guidance, “many EMR developers intentionally limited their software’s features to avoid incorporating analytical tools that would trigger costly and uncertain regulation.”

Outside developers have built these functions as stand-alone tools that can be independently regulated by the FDA and integrated into EMRs as modules, Gottlieb said, adding that it may be difficult to develop and purchase these tools as distinct modules “without limiting their inherent utility.”

Gottlieb advised moving back to an earlier approach to clinical decision support tools in line with the 21st Century Cures Act and FDA policies during his term as commissioner.

“If these AI tools are designed to augment the information available to clinicians and do not provide autonomous diagnoses or treatment decisions,” Gottlieb said, “they should not be subjected to premarket review.”