Dive Brief:

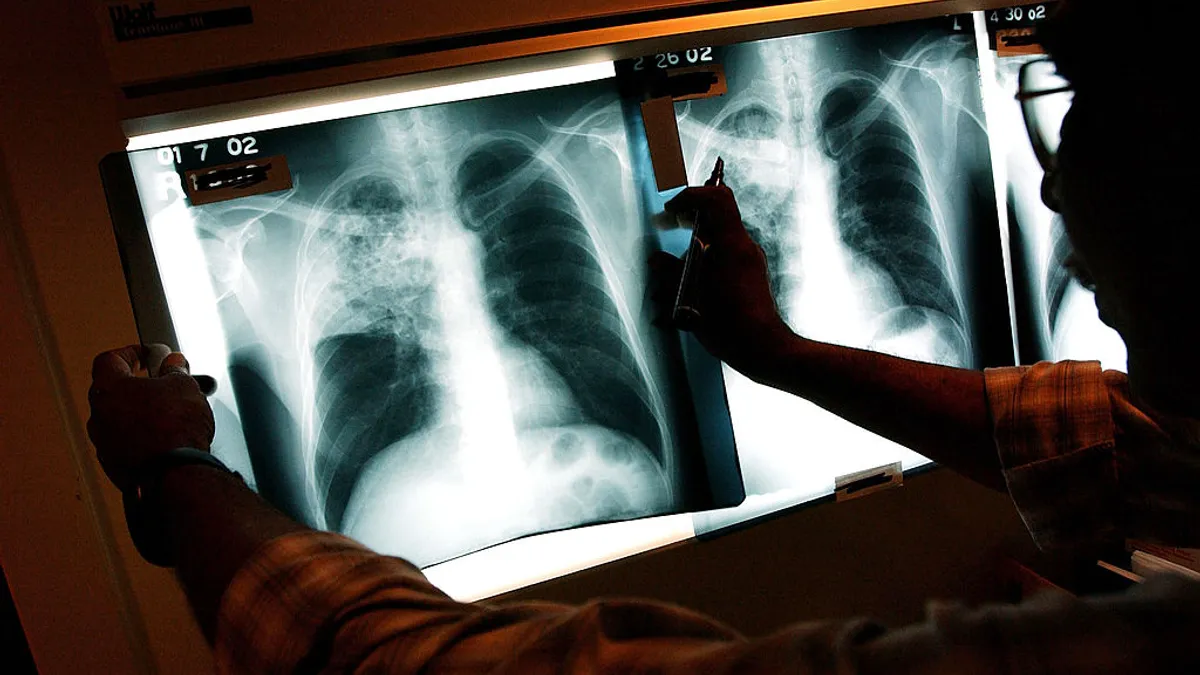

- A recent study found that a version of ChatGPT analyzed medical images at an expert level but frequently reached the right answer through flawed reasoning.

- The results, which were published Tuesday in the peer-reviewed journal npj Digital Medicine, show that OpenAI’s artificial intelligence model GPT-4 with vision is as good at answering multiple-choice questions about medical images as physicians who lack access to external resources.

- However, the model made mistakes in image comprehension, while still reaching the right answer, 27% of the time. The researchers said the errors show the need for further study before the AI models are integrated into clinical workflows.

Dive Insight:

GPT-4 with vision, called GPT-4V, is a version of the large language model behind ChatGPT that can analyze images and text at the same time. Pilot medical studies found the model outperformed medical students and physicians in closed-book settings. However, those studies looked only at the answers the model provided, without assessing how it reached its conclusions, according to the new research.

To understand the rationale for the model’s answers, scientists at the National Institutes of Health asked a senior medical student, GPT-4V and nine physicians from different specialties to answer 207 multiple-choice questions. The prompt to GPT-4V specified that the model should include a description of the image, a summary of relevant medical knowledge and step-by-step reasoning for the answer.

Physicians without access to external tools, such as a literature search engine, correctly answered 77.8% of the questions. GPT-4V was right 81.6% of the time. The researchers noted that the difference between the accuracy of the model and the physicians was not statistically significant.
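For readers who want a feel for why a gap of roughly four percentage points on 207 questions may not reach statistical significance, here is a rough sketch in Python. It is not the researchers' analysis: the article does not say which test they used, the counts of 169 and 161 correct answers are back-calculated from the rounded percentages, and the unpaired two-proportion z-test below ignores the fact that the model and the physicians answered the same questions.

```python
import math

TOTAL_QUESTIONS = 207      # from the article
GPT4V_CORRECT = 169        # assumption: back-calculated from 81.6% of 207
PHYSICIANS_CORRECT = 161   # assumption: back-calculated from 77.8% of 207


def two_proportion_z(x1, x2, n):
    """Unpaired two-proportion z-test for two groups of equal size n.

    Returns the z statistic and the two-sided p-value.
    """
    p1, p2 = x1 / n, x2 / n
    pooled = (x1 + x2) / (2 * n)
    standard_error = math.sqrt(pooled * (1 - pooled) * (2 / n))
    z = (p1 - p2) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p_value = two_proportion_z(GPT4V_CORRECT, PHYSICIANS_CORRECT, TOTAL_QUESTIONS)
print(f"z = {z:.2f}, two-sided p = {p_value:.2f}")
```

Under these assumptions the p-value comes out well above the conventional 0.05 threshold, which is in line with the researchers' statement. A paired test on the per-question results would be the more appropriate check, but the article does not report that data.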

The physicians performed better when they had access to external resources, giving the correct answer to 95.2% of the questions. The human advantage over the model was most pronounced in the one-third of questions that the researchers categorized as hard.

“Integration of AI into health care holds great promise as a tool to help medical professionals diagnose patients faster, allowing them to start treatment sooner,” Stephen Sherry, acting director of NIH’s National Library of Medicine, said in a statement. “However, as this study shows, AI is not advanced enough yet to replace human experience, which is crucial for accurate diagnosis.”

Physicians answered 46 questions incorrectly in the closed-book setting and 10 in the open-book setting. In each setting, GPT-4V correctly answered at least 70% of the questions that physicians got wrong.
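The miss counts line up with the accuracy figures reported for the 207-question set. A minimal sketch of that arithmetic, with a helper name (`accuracy_pct`) chosen here purely for illustration:

```python
TOTAL_QUESTIONS = 207  # size of the question set, from the article


def accuracy_pct(missed, total=TOTAL_QUESTIONS):
    """Percentage of questions answered correctly, rounded to one decimal."""
    return round(100 * (total - missed) / total, 1)


closed_book = accuracy_pct(46)  # 161 of 207 correct
open_book = accuracy_pct(10)    # 197 of 207 correct
print(closed_book, open_book)   # prints "77.8 95.2"
```

Both results match the 77.8% closed-book and 95.2% open-book physician accuracy cited in the study.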

“This suggests that GPT-4V holds potential in decision support for physicians,” the researchers said. The value of the model in decision support is shown by a question that all human groups answered incorrectly, “but GPT-4V successfully deduced tongue ulceration as a rare complication in the context of other manifestations of giant cell arteritis,” the authors said.

Still, the rationales provided by the model raised concerns. In one response, the model correctly identified malignant syphilis but failed to recognize that two skin lesions arose from the same pathology. That was one of many image comprehension errors.

Mistakes in knowledge recall and reasoning were rarer, each happening around 10% of the time. The model could be “logically incomplete while guessing right,” the researchers said, and gave answers that “showed the incompetence of GPT-4V in distinguishing similar manifestations of medical conditions.”

“Understanding the risks and limitations of this technology is essential to harnessing its potential in medicine,” said Zhiyong Lu, NLM senior investigator and an author on the study.