LAS VEGAS — Executives from America’s largest technology companies descended upon the HLTH conference this year with promises to lead the next round of innovations in healthcare artificial intelligence.

AI products, offered by companies like Google, Microsoft, Amazon, GE Healthcare and Nvidia, vow to help health systems solve a range of problems, from reducing documentation time to optimizing operating room schedules. Tech executives say they’ve moved past earlier iterations of AI tools, which tended to be single-point solutions, to offer platform solutions that can be tailored to the needs of an individual health system.

“We are truly at an inflection point,” said Sally Frank, worldwide lead for health and life sciences at Microsoft.

But while tech companies look to sell their products to health systems and providers, executives are split on how to offer the new technology to the industry responsibly — and whether clinicians are equally ready for the new tools.

“Any evaluation that we do at the foundation model level is only a first draft,” said Greg Corrado, senior director at Google Research and co-founder of the Google Brain Team. “It does need to be pioneered by healthcare systems that are willing and able to do the research on the ground, and not every health system can do that.”

Tech companies emphasize a platform approach

Technology firms pitch themselves as partners for healthcare organizations in AI development, offering deep expertise in product development and testing.

However, tech executives at HLTH were careful to note that they rarely tell health systems how they should apply AI.

“Google isn't a healthcare provider, and we don't want to become a healthcare provider,” said Corrado.

The executive said use cases for AI should come directly from health systems. Technology companies are like semiconductor chip manufacturers, Corrado said: they build a valuable, resource-intensive raw material (in this case, a large language model), and the healthcare industry applies it.

“The technologies that we develop should be things that allow healthcare organizations to conceive of and build their own futures in this space,” he said.

Google has worked with health systems, including the Cleveland Clinic and Community Health Systems, to pilot technology ranging from a healthcare-specific cloud services platform to a documentation tool that searches the electronic health record.

Other tech companies like Microsoft have rolled out similar partnerships that encourage health systems to tailor their own AI solutions.

It’s a promise that goes well beyond “out-of-the-box” tools solving “straightforward use cases,” said Kees Hertogh, vice president of health and life sciences product marketing at Microsoft.

In October, Microsoft said it would make it easier for health systems to build their own AI tools directly. The company is also using generative AI to help health systems organize their unstructured data, as well as medical imaging data, so that clients can “build their own co-pilots, their own AI agents,” said Hertogh.

Health systems are likely to prefer such tools over earlier offerings, according to Bill Fera, a principal consultant with Deloitte.

“I think hyperscalers and platform players are going to take over the space as people become more comfortable with doing their own builds and understanding how to do that,” Fera said in an interview. “There will be a move away from applications to our own platforms.”

A need for robust testing triggers questions about access

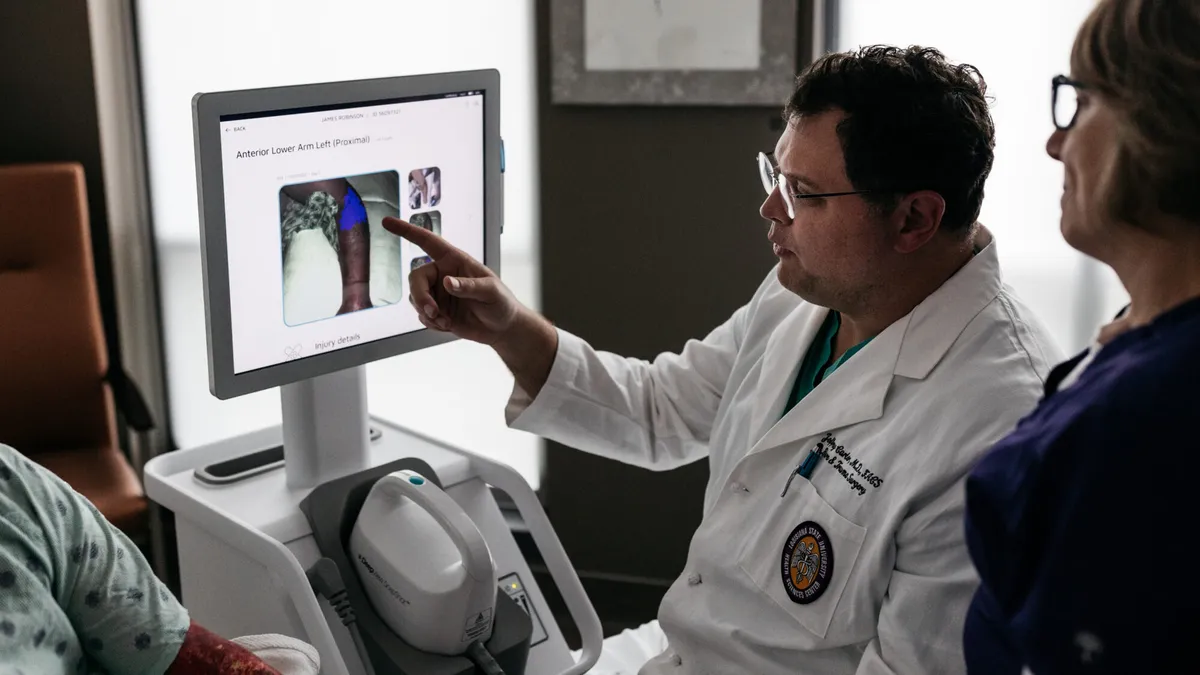

In healthcare, tech companies say they’re currently interested in helping health systems manage information overload. The companies’ ability to wrangle large amounts of data in written form, as well as images, could help cut down the time it takes providers to review notes or schedule patients for critical operations, executives say.

But different AI applications require varying levels of human supervision.

For now, tech companies at HLTH agree all healthcare AI should have a human supervisor. Some companies have focused first on administrative AI tools because they require less oversight. At GE Healthcare, for example, a scheduling tool might require less supervision than one used to assist in cancer treatment.

“These are the places where we think that we can bring AI in really, really fast because it's not directly tied to clinical decision support,” said Abu Mirza, general manager and global SVP of digital products at GE Healthcare. “It's not directly tied to making a decision about someone's surgery and other stuff. This is where AI can really get started.”

However, when AI is close to a clinical decision point, there must be even more robust testing, said Google’s Corrado.

Significant attention has been paid to the problem of AI “hallucinations” — when a model produces an answer that can’t be backed up by source text.

But equal attention ought to be paid to omissions, Corrado argued. Omissions occur when AI fails to cite relevant information in its answer. In some ways, omissions are harder to test for because they require a full review of often lengthy medical records.

However, not every health system has the resources or the know-how to robustly test cutting-edge technology, according to Microsoft and Google executives.

Microsoft has brought together a consortium of health systems seeking to use AI, so that more advanced systems can provide smaller systems with resources and knowledge ahead of deployment, according to Hertogh. The consortium has more than 15 hospitals and health systems, including Providence, Advocate Health, Boston Children’s Hospital, Cleveland Clinic, Mercy and Mount Sinai Health System.

Microsoft offers voluntary testing guidelines for health systems that use its AI products.

“We provide them with guidance and tech so you can create visibility and transparency into the system... The relationship is a bit of like, ‘Hey, this is how we're approaching building, this is how we're thinking about responsible AI,’” Hertogh said.

Microsoft also benefits from the information exchange, according to the executive. While Microsoft offers its take on how to implement AI, the exchange is a two-way street. Engaging with leaders at some of the nation’s best health systems helps promote trust in Microsoft’s offerings and establish credibility, he said.

This broad collaborative spirit is relatively new, according to Hertogh. He thinks it could help smaller providers access new tech and act as an “accelerant” for broader adoption of generative AI across health systems.

Google, however, doesn’t just suggest health systems test their AI — the company all but requires it.

Corrado said clinical feedback is integral to Google’s testing process, so to date, the company has only partnered with health systems that have some “sophistication” around AI and shared values about model testing.

“I think health systems that want to buy an off-the-shelf solution — they should probably wait. They should wait for things to mature, for things to stabilize,” the researcher said. “I don't think there are any evaluation frameworks that are sufficiently mature in any [healthcare] context where you can go and just deploy the tech. ... There's nothing that is that ironclad. You have to put it in the environment, evaluate how it's really going to be used on the ground and make adjustments there.”