Intuitive Surgical in July launched its first digital tool that harnesses artificial intelligence to enable surgeons to study their own procedure data and adapt approaches to achieve better results for patients. Called Case Insights, the technology works with data from Intuitive’s robots and hospitals to find correlations between surgical technique, patient populations and patient outcomes, and develop objective performance indicators.

Intuitive CEO Gary Guthart told investors in July that the company’s AI technologies can also help shorten surgeon training times, help hospitals improve surgical program efficiencies and ultimately reduce costs. The effort builds on several years of work with the robot maker’s clinical research partners.

MedTech Dive spoke with Intuitive’s Tony Jarc, senior director of digital solutions and machine learning, to learn about how the company is incorporating AI into robotic surgery.

This interview has been edited for length and clarity.

MEDTECH DIVE: What are some of the ways that Intuitive is employing artificial intelligence?

JARC: We have AI embedded in some of our instruments. An example [is] our stapler, where we can measure, thousands of times a second, certain things about how much the stapler is clamping down on tissue, [so] that we can do our best to ensure that a good staple fire occurs. And that hopefully would lead to better patient outcomes and trust and reliability in an advanced instrument that’s critical for many of our advanced procedures.

We have a platform called Ion that is super exciting and allows for an interventional pulmonologist to be able to navigate to distal parts of the lung for lung biopsy. And we have AI technologies that segment imaging scans to be able to help create a path by which the catheter can get to those distal regions of the lung and then successfully cold biopsy, hopefully earlier on in stages of lung cancer, so that patients have a better chance of recovery.

We have 3D modeling for pre-op imaging for our da Vinci [robot] business. You can take CT scans and segment them into 3D models and use them for pre-op preparation to understand anatomical structures, so that when you go into surgery and start your operation as a surgeon, you may have a mental model of what to expect that is specific to that patient.

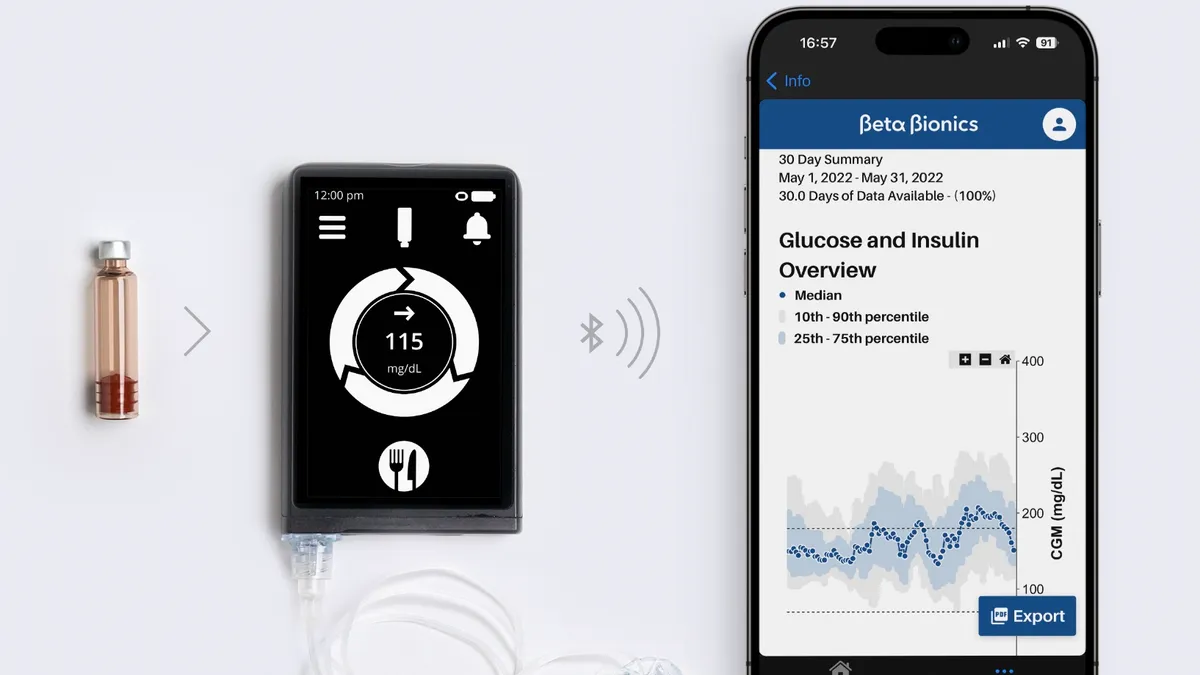

And then we continue to build out our analytics capabilities, in terms of some of our consulting services for how hospitals can optimize their [operating rooms] for scheduling. We also have analytics tools that are leveraging AI technology that are finding their way into our My Intuitive channel [an app] to be able to surface objective insights around relevant portions of surgery, which ideally will shorten a learning curve or improve patient outcomes.

How widely in use are these applications today?

For our Ion platform, it's absolutely essential for the behavior of the technology in the platform. It is part of every lung biopsy that there are 3D models created and paths that catheters can follow to do the distal lung biopsies. On the 3D modeling side within da Vinci, it's limited to specific organs and structures. We have a few early use cases in that, but it isn't necessarily something that is useful for every single surgery across the many different specialties and procedure types that are performed on da Vinci.

What are some next steps for AI in robotic surgery?

We are investing in expanding our capabilities in terms of being able to run segmentation algorithms on a broader set of anatomical structures from CT scans, so that they can be used in more of those procedures. We're incrementally building those out, [based] on how it fits into the surgeons’ pre-op planning stages and how it might really be validated in solving some of those problems. We see immense potential, which is why we continue driving and releasing and investing in those programs.

As we build advanced instruments with additional sensing capability, like the example I gave with the stapler, as we roll out advanced imaging capabilities or abilities to tag tissue with fluorescent markers that our endoscope can pick up and display to surgeons, they all work together to bring holistic solutions to our surgeon, care team and hospital customers.

How is AI helping to train surgeons?

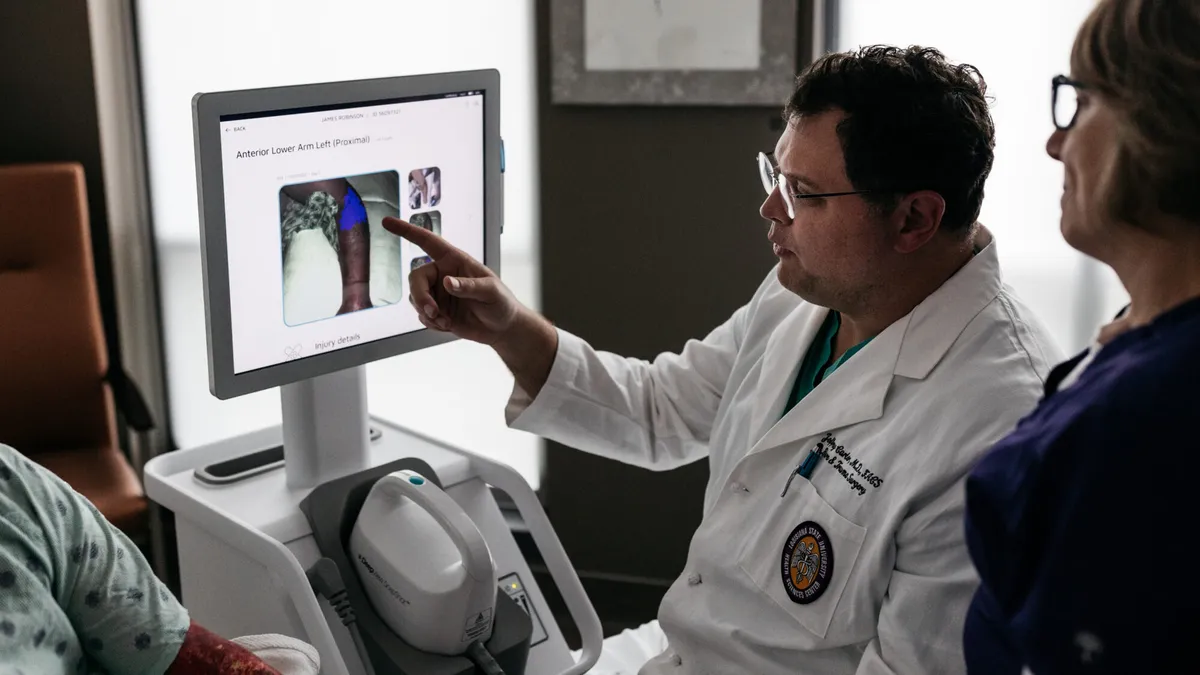

Core to some of our more recent work in surgical training is the ability to surface objective feedback from surgery. We can measure what happens during surgery, or we can use [machine learning] models to infer what happens in surgery, in terms of the different procedural steps. We can then quantify the behaviors of surgeons during those particular steps.

Surgeons can use that objective feedback in conjunction with their surgical coaches or the attendings or their peers, to be able to try to drive more personalized loops, whether it be outside [operating room] practice on SimNow [a surgical system simulator], whether it be reviewing additional videos of particular segments of surgery and best practices from other surgeons, etc.

In the emerging field of surgical data science, what role can Intuitive play?

This is a really exciting area, because objective metrics in surgery haven't really existed at scale, and the robotic platform facilitates measurement of those. AI technology facilitates extracting clinically meaningful objective metrics that are interpretable by a surgeon around a particular surgical activity. And we've been investing in not just building the technology, but also engaging the academic community in ushering in this technology.

We've been fortunate enough to partner with many leading academic universities in the U.S. and in Europe and in Asia, to be able to understand, how do we quantify surgery? How do we break it down into its critical steps? What objective metrics matter for which procedure type for assessing skill, objectively quantifying workflow and correlating to patient outcomes? They've been running their research that we've been enabling through the peer review process, and really establishing the foundation of the field.

What are the potential benefits of this technology for patients?

One area that our academic partners have been exploring, and we've been exploring, is the ability to fuse some of these objective metrics we can surface from the sensors on our robotic platform with patient data to make predictions about patient outcomes. You have a detailed readout of what happened during that really important surgical episode that you can pair with characteristics of that patient to make a prediction of how that patient might recover from surgery.

When we talk about some of our analytics products that are launching to our My Intuitive channel, we hope to scale those broadly across our procedure base. That said, we want to do it responsibly. That's one of the reasons why we have invested in collaborations and outfitted some of these academic institutions with the ability to vet the technology so that, as we introduce it, it actually has this ongoing validation element to it.